Introduction

Disclaimer - this is not another McKinsey or Gartner enterprise playbook dressed up for startups. Instead, the findings are grounded in conversations with more than fifty engineering leaders and staff engineers across Seed to Series D companies in Europe and the US. Collectively, these teams have raised over $1B and are building some of the fastest-moving AI companies today. The patterns here are not aspirational; they are already happening under real delivery pressure.

As a startup, it can be hard to know whether you are moving as fast as your peers, adopting the right technologies, and staying true to the agility required to navigate product-market fit. Most literature about the AI development lifecycle (AI-DLC) is written for enterprises with 1000+ employees, which are bound by regulation, Conway's law and many more constraints that make them akin to a cruise liner rather than a speed boat!

In startup land, when people talk about “AI adoption”, they often mislabel it as “vibe coding”, which frankly is f***ing infuriating. In practice, not everything is vibe coded. The real answer is more nuanced and really belongs on a scale that captures how deeply AI is embedded across the development lifecycle and how much autonomy teams are willing to give it.

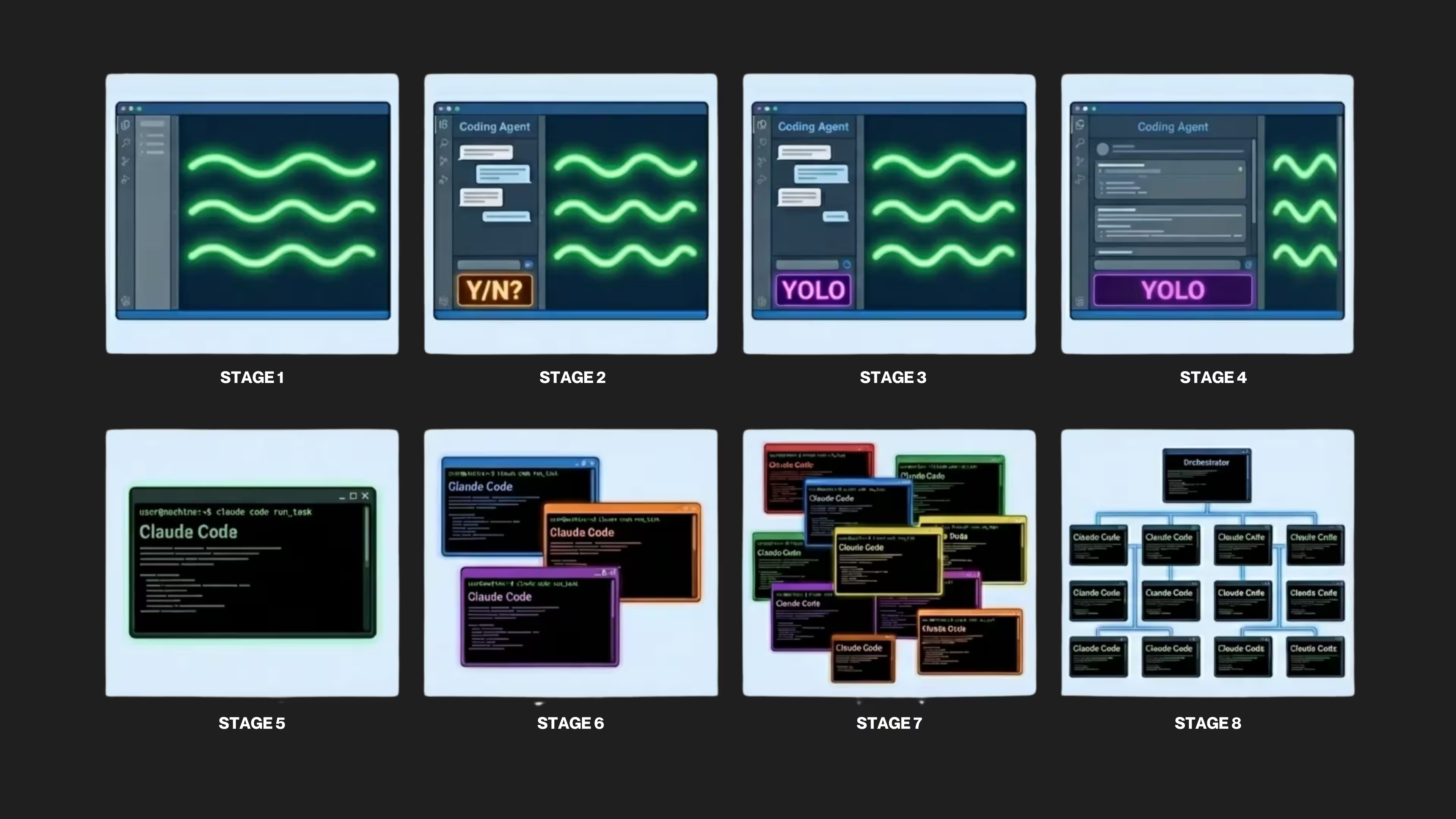

I find the model Steve Yegge uses in his “Welcome to Gas Town” article a good way to outline where AI adoption sits in terms of autonomy and trust.

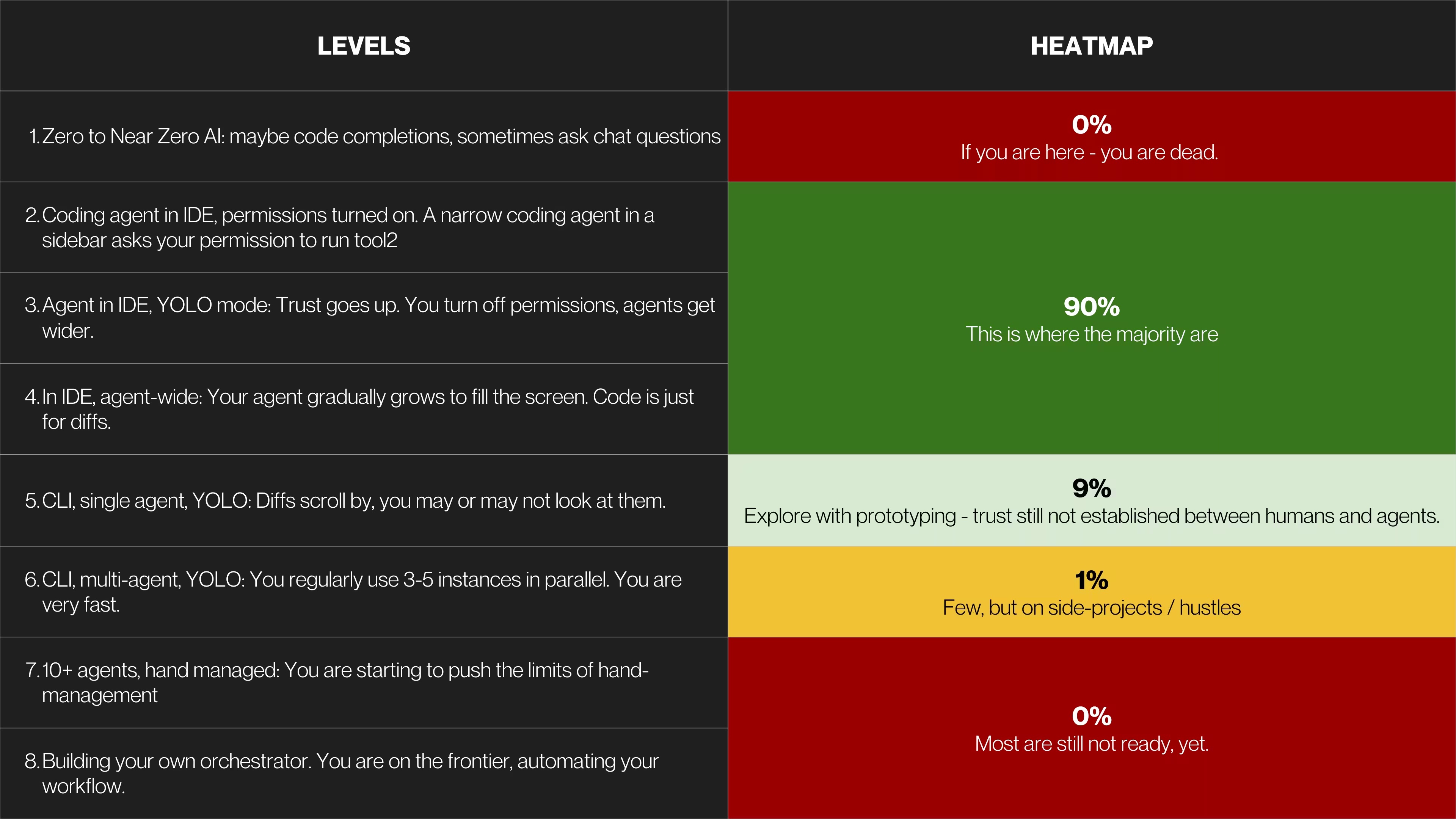

The table below outlines each stage and creates a heatmap of adoption:

At the lowest end of the spectrum are teams that occasionally use code completions or ask chat-based questions. Among high-growth startups, this mode barely exists anymore. Most teams have moved to IDE-based agents that can write code and run tools… but only with explicit permission. This isn’t a technical limitation - it’s a psychological one. The tooling is capable of more. Teams simply don’t trust it yet, or haven’t invested in the proper guardrails (or are moving so fast that the guardrails aren’t fully baked yet).

As trust increases, permissions are relaxed. Agents act without constant confirmation. The IDE stops being a place where code is written and becomes a place where diffs are reviewed. Some teams push further, running agents directly from the CLI, letting changes stream past faster than any human could realistically inspect. A very small number go even further, coordinating multiple agents or building their own orchestration layers.

Most teams stall before reaching that frontier.

What’s notable is that this stalling doesn’t happen because AI makes too many mistakes. It happens because teams cannot prove that the AI is correct.

Despite wide variation in tooling and maturity, these companies share the same priorities.

Shipping speed comes first.

Quality is not enforced through rigid upfront processes, but through fast feedback loops. AI dramatically accelerates these loops - up until the point where humans must intervene to validate outcomes. A lot of the engineering managers we speak to share the same philosophy: fail fast, get rapid feedback, and regenerate. For them, that loop is the hallmark of reliability.

Everyone-as-a-‘coder’

These companies also share another trend - more non-engineers are empowered to ship features.

To manage risk, teams implicitly define trust boundaries. Customer-impacting issues remain human-led. Core backend logic is increasingly AI-generated, but reviewed carefully. Frontend and UX work is mostly delegated to agents, with humans approving results. Prototypes and internal tools are fully automated. The approach is rooted in pragmatism. The more visible the blast radius, the more validation is required.
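The trust boundaries above are implicit in most teams, but they can be written down. The following is a minimal sketch, not something any of the teams described here is known to use: the area names, the `Oversight` levels and the `required_oversight` helper are all hypothetical, and defaulting unknown areas to the strictest level is an assumption added for illustration.

```python
from enum import Enum


class Oversight(Enum):
    """How much human involvement a change needs (hypothetical levels)."""
    HUMAN_LED = "human-led"
    AI_GENERATED_CAREFUL_REVIEW = "AI-generated, reviewed carefully"
    AGENT_DELEGATED_HUMAN_APPROVES = "delegated to agents, human approves"
    FULLY_AUTOMATED = "fully automated"


# Hypothetical area names; the mapping mirrors the boundaries described above.
TRUST_BOUNDARIES = {
    "customer_impacting_issue": Oversight.HUMAN_LED,
    "core_backend": Oversight.AI_GENERATED_CAREFUL_REVIEW,
    "frontend_ux": Oversight.AGENT_DELEGATED_HUMAN_APPROVES,
    "prototype": Oversight.FULLY_AUTOMATED,
    "internal_tool": Oversight.FULLY_AUTOMATED,
}


def required_oversight(area: str) -> Oversight:
    """Look up the oversight level for an area of the codebase.

    Assumption: anything not explicitly classified is treated as having
    the largest blast radius, so it falls back to human-led.
    """
    return TRUST_BOUNDARIES.get(area, Oversight.HUMAN_LED)
```

Making the table explicit gives agents and reviewers the same answer to "how much validation does this change need?", which is otherwise decided case by case.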

Intent driven development

In the enterprise, we are seeing the rise of spec-driven development, the resurgence of test-driven development, and behavior-driven development, but these have not trickled down to startups. Instead, startup teams rely on authoritative context: a combination of PRDs, tickets, designs, documentation, and sometimes tests, all bundled together to express intent and form the origination prompt. The format matters less than the clarity of the outcome.
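To make "authoritative context" concrete, here is a rough sketch of bundling those sources into a single origination prompt. The `ContextBundle` class, its field names and the section headings are assumptions made for illustration; the teams described here each have their own format.

```python
from dataclasses import dataclass, field


@dataclass
class ContextBundle:
    """Hypothetical container for the context that expresses intent."""
    outcome: str                                  # the one thing that must be clear
    prd: str = ""
    tickets: list[str] = field(default_factory=list)
    design_notes: str = ""
    documentation: str = ""
    tests: str = ""                               # optional, "sometimes tests"

    def to_prompt(self) -> str:
        """Join the non-empty sources into one prompt, outcome first."""
        sections = [
            ("Intended outcome", self.outcome),
            ("PRD", self.prd),
            ("Tickets", "\n".join(f"- {t}" for t in self.tickets)),
            ("Design notes", self.design_notes),
            ("Documentation", self.documentation),
            ("Tests", self.tests),
        ]
        # Skip empty sources so the prompt only carries real context.
        parts = [
            f"## {title}\n{body.strip()}"
            for title, body in sections
            if body.strip()
        ]
        return "\n\n".join(parts)


bundle = ContextBundle(
    outcome="Users can reset their password by email.",
    tickets=["Add a reset endpoint", "Send the reset email"],
)
prompt = bundle.to_prompt()
```

The outcome goes first and empty sources are dropped, which reflects the point above: the format matters less than whether the intended outcome is unambiguous.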

Startup AI-DLC

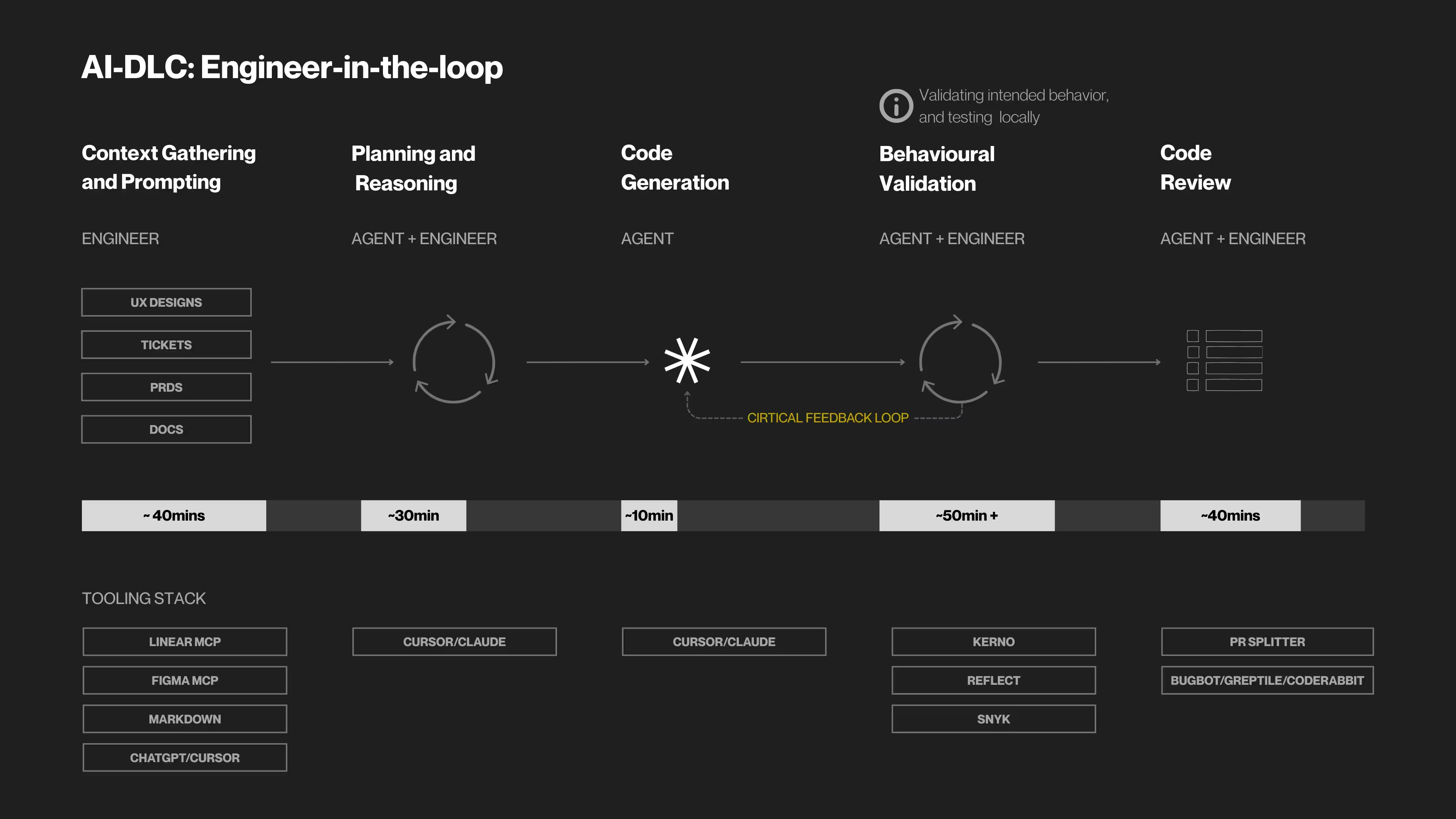

The startups we spoke to seem to converge on the same process:

- More work goes in up front to provide appropriate context and instructions, with LLMs used to help consolidate and organise that context into a single prompt.

- The AI-IDE or Claude then carries out the planning and reasoning, with an engineer in the loop.

- Next comes a new step in the process: validation. Here the engineer tests the change locally and tries to understand what new code and behaviours the AI has introduced.

- Then normal service resumes with CI, with some companies opting to use PR splitters to break down larger PRs into more digestible chunks (success varies).

Developers now do most of their work and discussion before code is written. They clarify intent, debate tradeoffs, and ensure the problem is well-defined. That context is handed to a reasoning model for planning, and then to agents for execution. Tooling handles scaffolding, security, and test generation. Humans return at the end to review.

But, bottlenecks exist. Truth be told, code was never the problem for a good engineer - it was the layers of scaffolding around it.

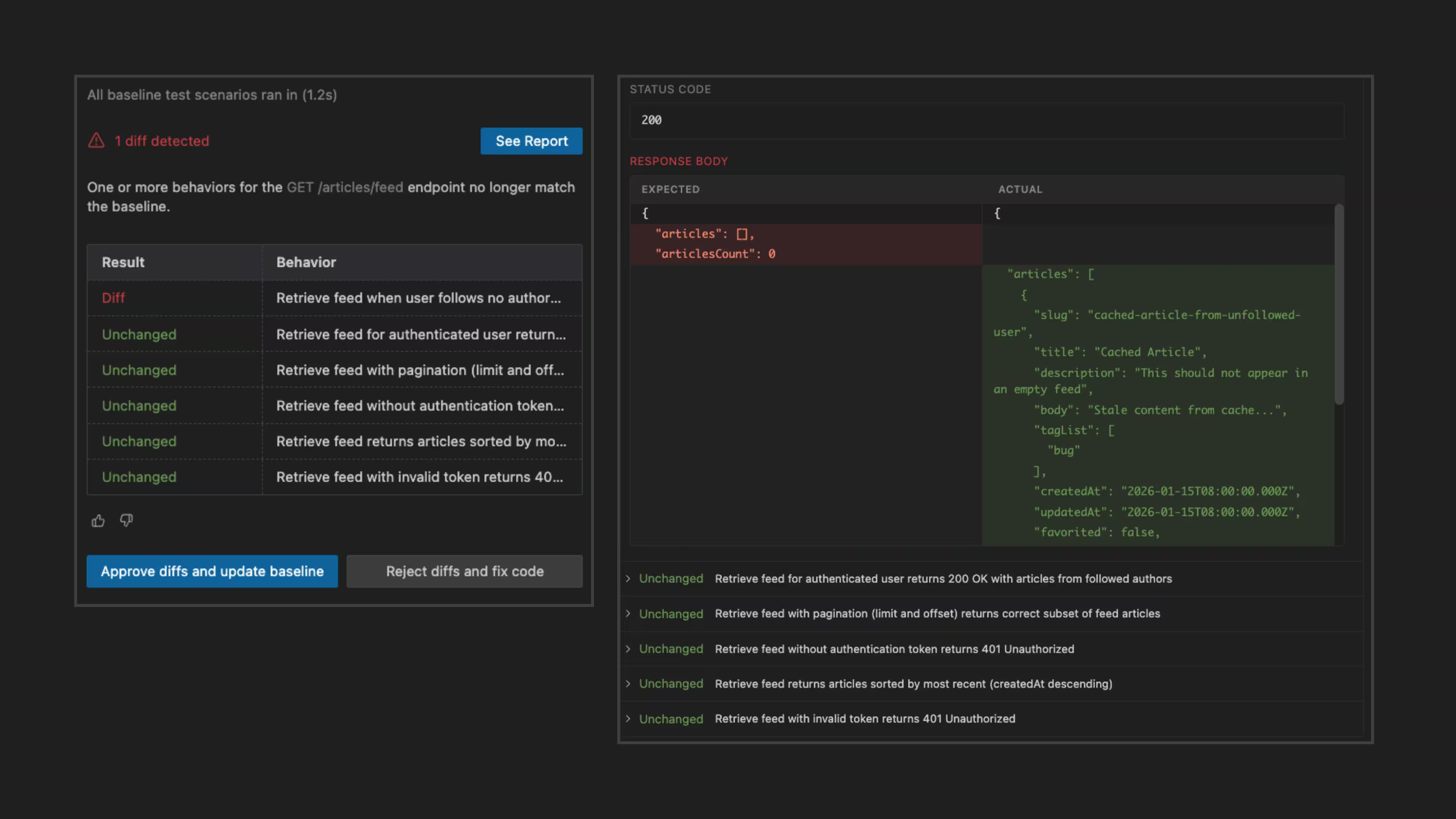

Code review has become the dominant bottleneck. Pull requests are larger. Diffs are harder to reason about. Developers trust code written by other humans more than code written by agents, even when the agent followed instructions perfectly. When intended behavior and actual behavior diverge, the only way to detect it is through manual validation—reading code, replaying scenarios, or waiting for issues to surface in production.

At this point, AI has already moved the bottleneck. Writing code is no longer the slowest part of software development. Understanding whether the code does the right thing is.

Emergence of the Continuous Validation Phase

This is why validation—not generation—is becoming a critical investment for engineering leaders to fully enable the AI development lifecycle.

Validation acts as the bridge between what was intended and what AI has generated. It is how engineering leaders turn autonomy into trust. Without it, every increase in agent capability simply shifts more cognitive load onto human reviewers. Faster code generation produces larger diffs. Better planning produces more complex systems. And without a reliable way to validate behavior against intent, humans are forced to slow everything down. Where teams are investing, this validation is happening before the PR, giving engineers faster signals closer to the point of generation.

The frontier teams are not winning because they have better models. They are winning because they are systematically reducing the cost of validation by creating faster feedback loops. They are finding ways to answer a simple question quickly and confidently:

Does this behave the way we expect, in production-like conditions?
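One way to picture answering that question cheaply is to state the intended behaviour as data and check the generated code against it before a human reads the diff. The sketch below is an illustration under stated assumptions, not a description of any team's tooling: `Expectation`, `find_mismatches` and the `apply_discount` example are hypothetical, and real production-like validation would run against a realistic environment rather than a single function.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Expectation:
    """One statement of intent: given these inputs, expect this result."""
    description: str
    inputs: tuple
    expected: Any


def find_mismatches(
    behaviour: Callable[..., Any],
    expectations: list[Expectation],
) -> list[str]:
    """Run each expectation and describe where actual differs from intended."""
    mismatches = []
    for exp in expectations:
        try:
            actual = behaviour(*exp.inputs)
        except Exception as err:  # a crash is also a mismatch with intent
            mismatches.append(f"{exp.description}: raised {err!r}")
            continue
        if actual != exp.expected:
            mismatches.append(
                f"{exp.description}: expected {exp.expected!r}, got {actual!r}"
            )
    return mismatches


# Hypothetical agent-generated function under validation.
def apply_discount(total: int, percent: int) -> int:
    return total - (total * percent) // 100


intent = [
    Expectation("10% off 200", (200, 10), 180),
    Expectation("no discount leaves the total unchanged", (50, 0), 50),
]
report = find_mismatches(apply_discount, intent)
```

An empty report means behaviour matched intent for the stated cases. A non-empty one surfaces the mismatch at the point of generation rather than in code review or production.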

The next leap forward will not come from agents that write more code. It will come from systems that can validate behavior, surface mismatches, and close the feedback loop before humans become the bottleneck again.

That is the opportunity that these companies are addressing. Closing this gap means faster shipping, better execution and a more efficient AI-DLC. Speed of execution directly correlates with product-market fit, so the payoff is there.

Next bet: Claude as the SDK for the AI-DLC

In the last two weeks, I have had conversations with two very experienced technical serial founders. Both have fully moved away from Cursor and are all in on Claude. Not just to generate code, but to orchestrate their local stack. Claude skills and hooks call the appropriate tooling, and developers step in when Claude is unsure or when a change is critical and requires more human-in-the-loop oversight.

So, while the Gas Town model shown at the beginning of the article suggests that a lot of trust still has to be built, and a lot of diffusion has to happen, before we get to agentic orchestration, my bet is that startups using Claude (or similar) will get there faster, within the next 6 months.