Introduction

“I don’t invest in startups where their current unit costs are 2x of what they charge.”

That line came from a conversation I had with a partner at a well-known Valley-based VC firm. He caveated it: a startup growing fast, say from $15M to $100M in under 18 months, is the exception, not the rule.

Currently, LLM providers are arguably subsidising the industry. Reports suggest some complex coding prompts can cost up to thousands of dollars per query to process, and that the standard $20/month subscription model fails to break even. As energy demand spikes and hardware costs remain high, the era of subsidised inference will likely end. Startups currently spending $60 to earn $20 will face a difficult path to profitability unless they can pivot to enterprise-grade pricing or drastically optimise their stack.
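To put numbers on that, here is a minimal sketch of the margin arithmetic. The function name `gross_margin` is ours for illustration, and the inputs are simply the figures quoted above (unit costs at 2x price, and $60 spent to earn $20).

```python
def gross_margin(revenue: float, cost_to_serve: float) -> float:
    """Return gross margin as a fraction of revenue (negative means a loss)."""
    return (revenue - cost_to_serve) / revenue


# The VC's threshold: unit costs are 2x what you charge.
vc_threshold = gross_margin(revenue=1.0, cost_to_serve=2.0)

# The example from the text: spending $60 to earn $20.
subsidised = gross_margin(revenue=20.0, cost_to_serve=60.0)

print(f"Costs at 2x price: {vc_threshold:.0%}")  # -100%
print(f"$60 to earn $20:   {subsidised:.0%}")    # -200%
```

In other words, the $60-to-earn-$20 case is already well past the 2x line that investor described.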

Tracking unit economics

At Kerno, we decided to tackle this problem from day one. We didn’t want to fly blind on our margins, so we built a dedicated testing CLI and LLMOps infrastructure (using tools like Langfuse) to track our unit economics in real time.

This gives us a live view of token costs, rolled up per test. That is especially important when models are constantly changing and you are trying to eke everything out of a model before crossing the 200K context window threshold.
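As a rough illustration of what "token costs rolled up per test" means, here is a minimal sketch in plain Python. It is not our actual pipeline and does not use the Langfuse API; the model names, the per-million-token prices, and the helper names (`LLMCall`, `call_cost`, `rollup_by_test`, `near_context_limit`) are all hypothetical placeholders.

```python
from dataclasses import dataclass

# Hypothetical prices in USD per 1M tokens; substitute your provider's real rates.
PRICES_PER_MTOK = {
    "frontier-model": {"input": 3.00, "output": 15.00},
    "small-model": {"input": 0.25, "output": 1.25},
}

CONTEXT_LIMIT_TOKENS = 200_000  # the 200K threshold mentioned above


@dataclass
class LLMCall:
    test_id: str        # which test run this call belongs to
    model: str          # key into PRICES_PER_MTOK
    input_tokens: int
    output_tokens: int


def call_cost(call: LLMCall) -> float:
    """Cost of a single LLM call in USD."""
    price = PRICES_PER_MTOK[call.model]
    total = call.input_tokens * price["input"] + call.output_tokens * price["output"]
    return total / 1_000_000


def rollup_by_test(calls: list[LLMCall]) -> dict[str, float]:
    """Sum call costs per test_id."""
    totals: dict[str, float] = {}
    for call in calls:
        totals[call.test_id] = totals.get(call.test_id, 0.0) + call_cost(call)
    return totals


def near_context_limit(call: LLMCall, headroom: float = 0.9) -> bool:
    """Flag calls whose input uses at least `headroom` of the context limit."""
    return call.input_tokens >= headroom * CONTEXT_LIMIT_TOKENS


calls = [
    LLMCall("test-a", "frontier-model", 100_000, 2_000),
    LLMCall("test-a", "small-model", 10_000, 1_000),
    LLMCall("test-b", "small-model", 4_000, 400),
]
for test_id, cost in rollup_by_test(calls).items():
    print(f"{test_id}: ${cost:.5f}")
```

Keeping the price table separate from the call records means a model price change only touches one place, and historical runs can be re-costed.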

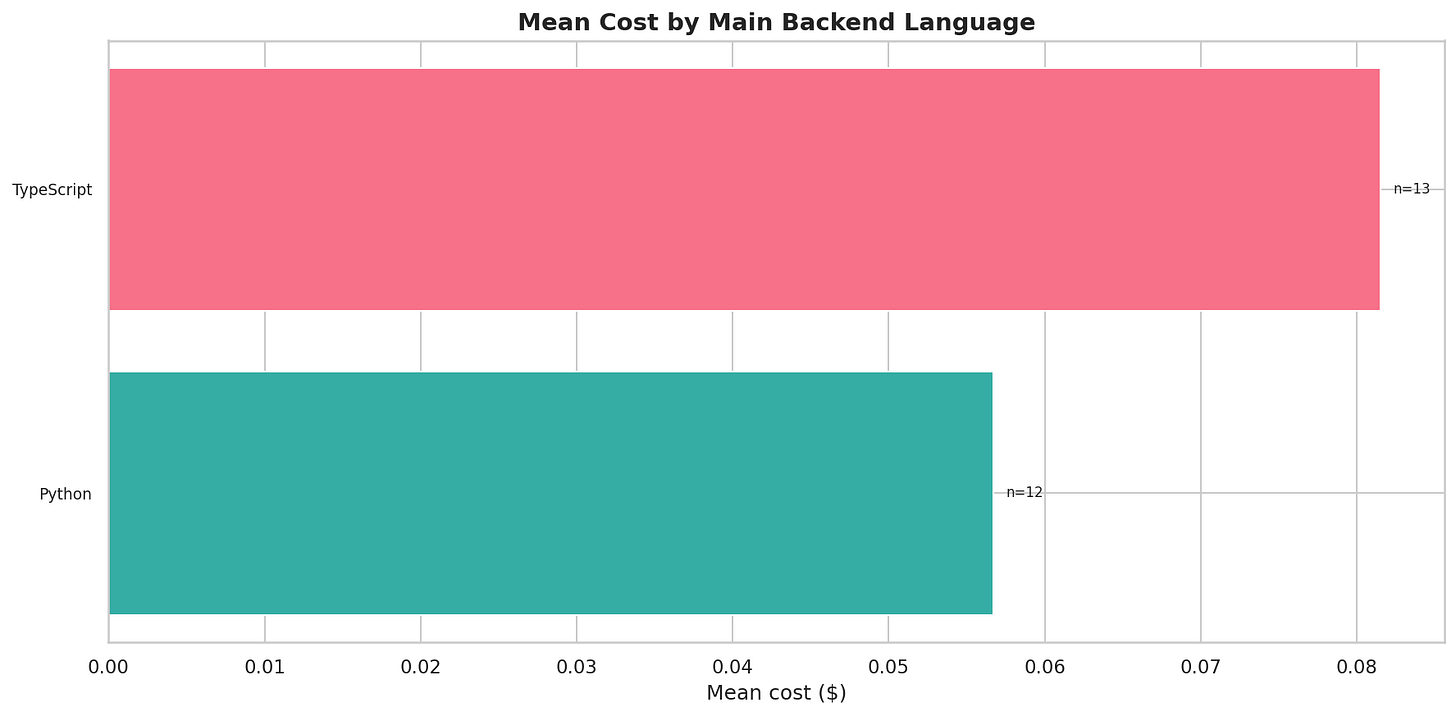

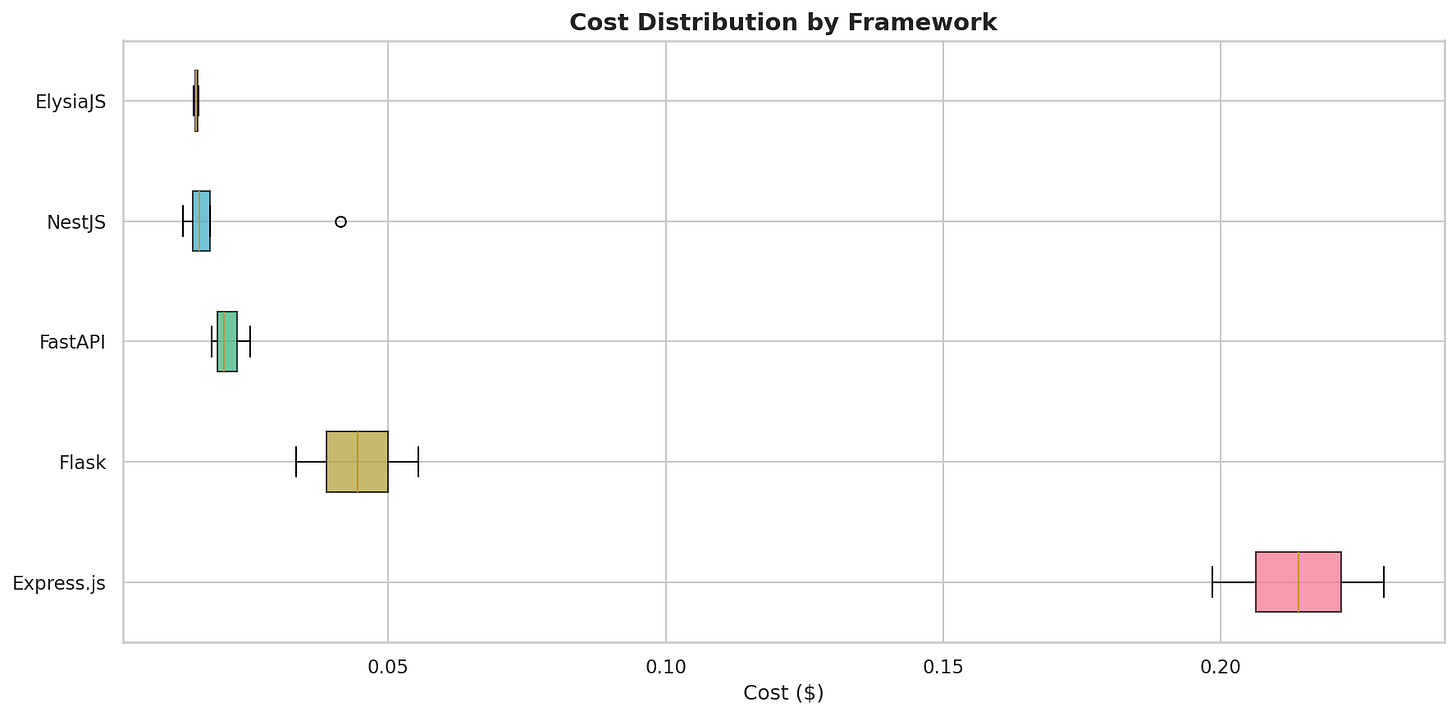

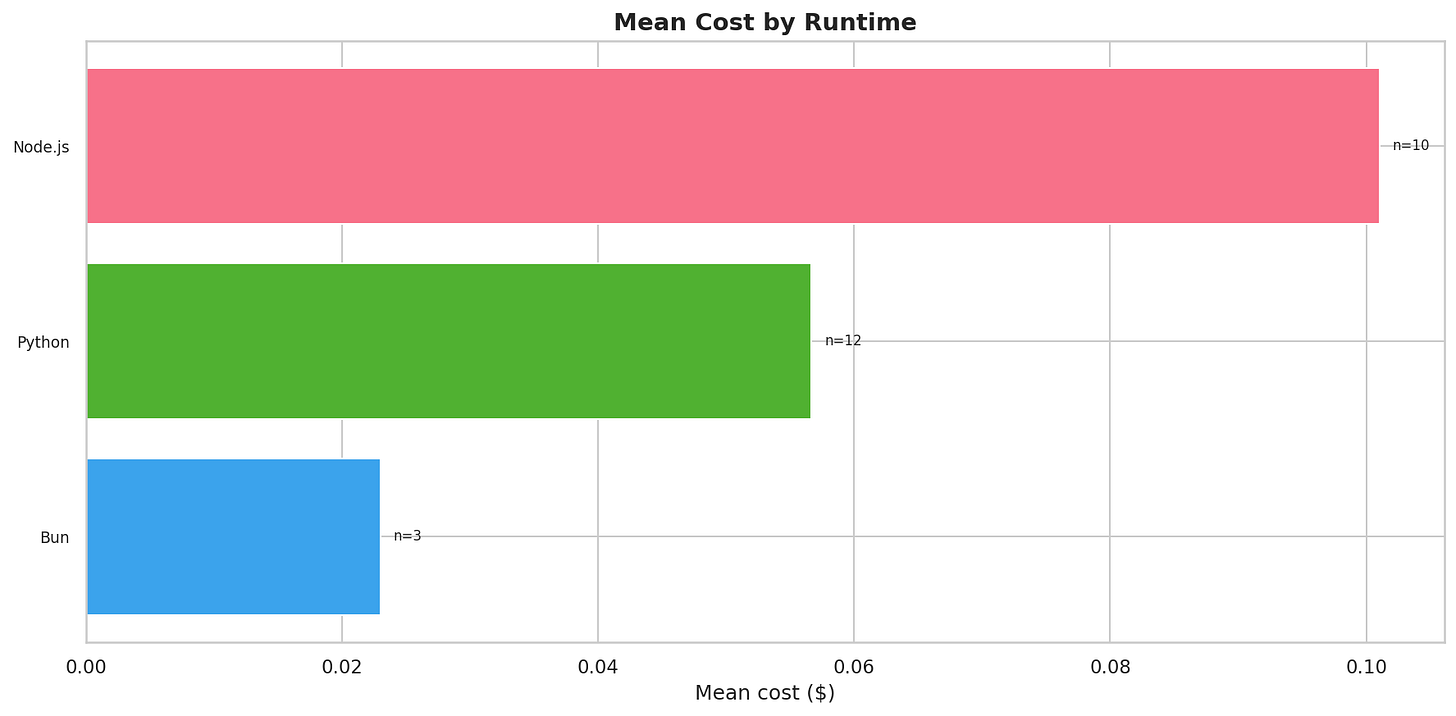

With our testing framework, we can even break this down by the runtime, language and framework we support, and by the complexity of the codebase we are testing on.

While the above is only a subset of the overall dataset we work with, it gives a flavour of how these variables can affect cost.
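The breakdown by runtime, language, framework and complexity can be sketched as a simple group-by over per-test cost records. This is an assumption about the shape of the data, not our real schema: the field names, the `average_cost_by` helper, and every value in `TEST_RUNS` are made up for illustration.

```python
from collections import defaultdict
from statistics import mean

# Made-up rows for illustration only; these are not real Kerno measurements.
TEST_RUNS = [
    {"runtime": "runtime-a", "language": "lang-a", "framework": "fw-x", "complexity": "low", "cost_usd": 0.10},
    {"runtime": "runtime-a", "language": "lang-a", "framework": "fw-y", "complexity": "high", "cost_usd": 0.30},
    {"runtime": "runtime-b", "language": "lang-b", "framework": "fw-z", "complexity": "low", "cost_usd": 0.05},
]


def average_cost_by(runs: list[dict], *dimensions: str) -> dict[tuple, float]:
    """Average cost per test, grouped by one or more dimensions."""
    groups: dict[tuple, list[float]] = defaultdict(list)
    for run in runs:
        key = tuple(run[d] for d in dimensions)
        groups[key].append(run["cost_usd"])
    return {key: mean(costs) for key, costs in groups.items()}


# Group by a single dimension, or by several at once.
print(average_cost_by(TEST_RUNS, "language"))
print(average_cost_by(TEST_RUNS, "language", "complexity"))
```

Grouping on a tuple of dimensions keeps the same function usable for coarse views (per language) and fine ones (per language and complexity).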

Beyond operational efficiency: why visibility matters

While knowing our margins is the primary driver, this visibility provides several other strategic advantages:

- Sustainable Open-Source and Free Tier Version

  - We want to support the community. Our free/trial tiers offer full-functionality tests because we want engineers working on open-source or side hustles to use Kerno without friction. Detailed cost tracking tells us exactly how "generous" we can afford to be. It allows us to set limits that protect our business while maximizing the value we give to free users, ensuring our free tier remains a sustainable part of the ecosystem rather than a "burn" we eventually have to kill.

- Benchmarking for “Bring Your Own Model” (BYOM)

  - Enterprises often have preferred providers or private instances (e.g., Azure OpenAI or Bedrock). Because we track our metrics so closely, we can provide customers with accurate benchmarks. If a customer wants to run Kerno on their own infrastructure, we can tell them, with data, exactly what their projected compute bill will look like based on their specific codebase.

- Optimising the Agent-Chain

  - Not every task requires a frontier model like GPT-4o or Claude 3.5 Sonnet. Our data helps us identify which parts of our agent-chain are “over-powered.” We are currently experimenting with routing simpler tasks to open-source models (like Llama 3 or Mistral) to reduce costs without sacrificing the quality of the output.
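To make the routing idea concrete, here is a minimal sketch of a rule-based router that sends simpler steps to a cheaper model. This is not how our agent-chain is actually implemented: the model labels, the `AgentStep` fields, the `pick_model` helper, and the 8,000-token threshold are all hypothetical, and a real router would be tuned against quality and cost data.

```python
from dataclasses import dataclass

# Hypothetical model tiers; substitute whichever models you actually run.
CHEAP_MODEL = "open-source-small"
FRONTIER_MODEL = "frontier-large"


@dataclass
class AgentStep:
    name: str
    needs_reasoning: bool   # does this step need multi-step reasoning?
    est_input_tokens: int   # rough size of the prompt for this step


def pick_model(step: AgentStep, token_threshold: int = 8_000) -> str:
    """Route a step to the frontier model only when it looks hard or large."""
    if step.needs_reasoning or step.est_input_tokens > token_threshold:
        return FRONTIER_MODEL
    return CHEAP_MODEL


steps = [
    AgentStep("summarise-file", needs_reasoning=False, est_input_tokens=2_000),
    AgentStep("plan-test-suite", needs_reasoning=True, est_input_tokens=3_000),
    AgentStep("scan-large-module", needs_reasoning=False, est_input_tokens=40_000),
]
for step in steps:
    print(f"{step.name} -> {pick_model(step)}")
```

Per-step cost tracking is what tells you whether a rule like this is actually saving money, and whether output quality holds up on the steps you downgraded.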

Still more work to do…

We’re still iterating on this, but keeping the data front and center has already changed how we architect our agents. It’s a work in progress, and we’d love to hear how other teams are tackling the "token tax" in their own stacks.

If you’re interested in the technical side of how we actually wired this up (the stuff involving our CLI and the testing pipelines), Antoine wrote a great deep dive on it over on the Kerno blog.